Decisions on Steroids

Welcome to the Augmented Future

In a hyper-connected digital world where 2.5 quintillion bytes of data are generated every day, making informed decisions has become both more critical and more challenging [1]. Traditional decision-making methods have struggled to keep up, often overwhelmed by “data overload” and influenced by human biases. This raises a crucial question: How can we extract meaningful insights with precision and accuracy while maintaining originality?

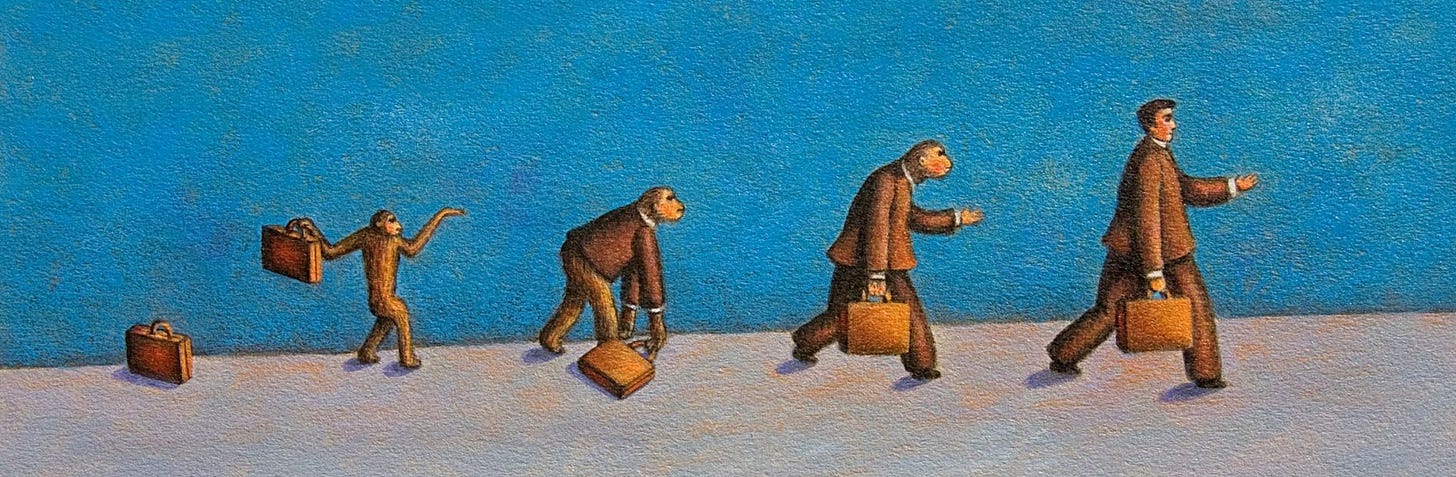

Augmented decision-making addresses this challenge by leveraging artificial intelligence to enhance rather than replace human judgment. By combining AI’s ability to process and analyze data at unprecedented speeds with the intuition and expertise of human intelligence, businesses and executives can make faster and more effective decisions. From defense services to retail, stakeholders across sectors are increasingly adopting AI-driven applications to stay agile and competitive.

What Makes It Augmented

Before diving deeper, it is essential to clarify what distinguishes augmented decision-making from its stepbrother, autonomous decision-making.

The human-in-the-loop (HITL) approach ensures human oversight in AI-driven decision-making processes, allowing for contextual understanding and alignment with ethical and corporate goals. For example, IBM Watson analyzes medical records to suggest potential diagnoses, but the final decision remains with medical professionals [2].

Another defining characteristic of augmented decision-making is its ability to minimize cognitive biases by relying on data-driven evidence rather than subjective judgment. Platforms like HireVue use AI to evaluate candidates objectively, and a recent push by MIT emphasizes the importance of bias audits in AI systems [3].

Effective AI tools must also manage increasingly complex decision-making scenarios, ranging from forecasting to predictive maintenance. For instance, retail giants like Amazon use AI to optimize inventory based on personalized consumer data. According to the Scaling AI report by Deloitte, organizations that scale AI effectively achieve three times the return on investment of their counterparts [4].

These characteristics form the pillars of augmented decision-making. Unlike autonomous systems that function without human intervention, augmented decision-making seeks to enhance human judgment through a symbiotic relationship between humans and machines.

The Achilles Heel

While augmented decision-making holds immense potential, its adoption is not without challenges.

One significant obstacle is ensuring access to quality and well-integrated data. Poor data, such as incomplete or outdated datasets, can lead to analytical inaccuracies. Implementing data governance frameworks can improve decision-making accuracy by 20 to 40 percent, as reported by Gartner [5].
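To make "incomplete or outdated" concrete, here is a minimal sketch of the kind of check a data governance process might run before data reaches a model. The record layout, the field names, and the 90-day freshness window are assumptions made for illustration; they are not drawn from the Gartner report.

```python
from datetime import date

def quality_report(records, required_fields, max_age_days, today):
    """Return the share of records that are incomplete or stale.

    Hypothetical helper: a record is 'incomplete' if any required field is
    missing or empty, and 'stale' if it was last updated more than
    max_age_days before `today`.
    """
    if not records:
        raise ValueError("no records to assess")
    incomplete = 0
    stale = 0
    for rec in records:
        if any(rec.get(field) in (None, "") for field in required_fields):
            incomplete += 1
        if (today - rec["updated"]).days > max_age_days:
            stale += 1
    total = len(records)
    return {"incomplete": incomplete / total, "stale": stale / total}

# Illustrative data only.
records = [
    {"sku": "A1", "price": 9.5, "updated": date(2024, 5, 1)},
    {"sku": "B2", "price": None, "updated": date(2024, 5, 20)},
    {"sku": "C3", "price": 4.0, "updated": date(2023, 1, 10)},
    {"sku": "", "price": 7.0, "updated": date(2024, 5, 28)},
]
report = quality_report(records, ["sku", "price"], 90, date(2024, 6, 1))
print(report)
```

A report like this could gate a pipeline: if either share crosses an agreed limit, the data goes back to its owner before any model sees it.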

Although AI can reduce cognitive biases, it can also amplify existing biases introduced during its development. Biases can lead to unfair outcomes, raising ethical and legal risks. Adopting explainable AI (XAI) techniques for bias audits and ensuring diversity in development teams can help mitigate this risk.
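One simple form a bias audit can take is comparing how often each group receives a favorable outcome. The sketch below computes selection rates per group and their ratio. It is an outcome-level check rather than an XAI technique, and the group labels, the sample data, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are assumptions for illustration.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """Map each group to the fraction of its members with a favorable outcome."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in outcomes:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {group: sel / total for group, (sel, total) in counts.items()}

def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any favorable outcome")
    return min(rates.values()) / highest

# Illustrative data only: (group, was_selected) pairs.
outcomes = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold, not a legal test
print(rates, ratio, needs_review)
```

A low ratio does not prove unfairness on its own, but it flags where a human reviewer should look more closely.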

Another challenge is the cultural shift required within organizations to adopt AI systems. Resistance can stem from a lack of trust or fear of job displacement. Promoting AI literacy and maintaining transparency can ease this transition, leading to higher adoption rates.

Navigating evolving regulations also poses a significant challenge, especially when deploying AI systems across different regions. Incorporating privacy-by-design principles and engaging compliance experts early in the development process can help manage legal risks and operational complexities. Addressing these challenges with a strategic approach is crucial to unlocking the full potential of augmented decision-making.

Ingredients for Adoption

Successfully integrating AI into decision-making requires a well-thought-out approach that aligns technology with business goals, ethics, and organizational culture.

Before development, organizations should identify specific use cases where AI can enhance decision-making. This might include predictive analytics for demand forecasting or natural language processing (NLP) for customer insights. Conducting assessments and prioritizing high-impact use cases can streamline adoption.

Another ingredient, the human-in-the-loop (HITL) approach, balances AI’s analytical power with human judgment, ensuring that critical decisions retain ethical oversight. This is especially vital in regulated sectors like healthcare and finance. Designing decision workflows that incorporate AI recommendations and explainable AI (XAI) can build trust and transparency.
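A decision workflow of this kind can be sketched in a few lines. In the hypothetical example below, the AI produces a recommendation with a confidence score and a rationale, and anything high-stakes or low-confidence is routed to a human reviewer who may accept or override it. The class names, the `decide` function, and the 0.9 threshold are assumptions, not a reference to any particular product.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    action: str
    confidence: float  # model's own score in [0, 1]
    rationale: str     # explanation shown to the reviewer

@dataclass
class Decision:
    action: str
    decided_by: str    # "human" or "ai"
    overridden: bool   # True if the human changed the AI's suggestion

def decide(rec, reviewer, high_stakes, auto_threshold=0.9):
    """Route a recommendation: humans decide critical or uncertain cases."""
    if high_stakes or rec.confidence < auto_threshold:
        chosen = reviewer(rec)  # reviewer sees the action and the rationale
        return Decision(chosen, "human", chosen != rec.action)
    return Decision(rec.action, "ai", False)

# Hypothetical reviewer who escalates any approval for a second look.
def cautious_reviewer(rec):
    return "escalate" if rec.action == "approve" else rec.action

rec = Recommendation("approve", 0.95, "income and history within policy")
critical = decide(rec, cautious_reviewer, high_stakes=True)
routine = decide(rec, cautious_reviewer, high_stakes=False)
print(critical)
print(routine)
```

Recording `decided_by` and `overridden` gives the organization an audit trail, which in turn shows how often reviewers disagree with the model.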

It is also important to avoid AI systems that function as “black boxes.” Transparent AI systems allow users to understand the reasoning behind outputs, reducing the risk of hallucinations and enhancing reliability.

The fear of job displacement and a lack of understanding of AI’s capabilities are significant barriers to adoption. Investing in AI literacy programs and change management initiatives can demystify AI, foster trust, and improve AI readiness.

By aligning AI strategies with these best practices, organizations can harness AI’s potential effectively while mitigating risks related to bias, compliance, and user adoption.

The Promised Land

One of the current barriers to AI in decision-making is its “black-box” nature, which limits stakeholders’ understanding of how decisions are made. The future of augmented decision-making lies in Explainable AI (XAI), which aims to offer understandable explanations of AI inferences.

As no-code and low-code AI platforms become more accessible, stakeholders at all levels will be able to leverage AI for decision-making. This shift is set to democratize AI, fostering a data-driven culture across organizations.

While many organizations currently use AI for predictive analytics, the next evolution is prescriptive analytics—AI systems that not only predict outcomes but also recommend actionable strategies. Advanced models capable of evaluating multiple scenarios and recommending optimal actions will redefine augmented decision-making.
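The step from predictive to prescriptive can be illustrated with a toy inventory decision. Given predicted demand scenarios with probabilities, the sketch below scores each candidate order quantity by expected profit and recommends the best one. The scenarios, prices, and function names are invented for illustration, and real systems would use far richer models.

```python
def expected_profit(order_qty, scenarios, unit_cost, unit_price):
    """Probability-weighted profit for one order quantity.

    scenarios is a list of (demand, probability) pairs; unsold stock is
    assumed to be worthless, which is a simplification.
    """
    total = 0.0
    for demand, prob in scenarios:
        sold = min(order_qty, demand)
        total += prob * (sold * unit_price - order_qty * unit_cost)
    return total

def recommend(candidates, scenarios, unit_cost, unit_price):
    """Return (best_quantity, scores) where scores maps quantity -> expected profit."""
    scores = {
        qty: expected_profit(qty, scenarios, unit_cost, unit_price)
        for qty in candidates
    }
    best = max(scores, key=scores.get)
    return best, scores

# Predictive output (assumed): three demand scenarios and their probabilities.
scenarios = [(80, 0.3), (100, 0.5), (120, 0.2)]
best_qty, scores = recommend([80, 100, 120], scenarios, unit_cost=6, unit_price=10)
print(best_qty, scores)
```

The forecast alone says demand will probably be around 100 units; the prescriptive layer turns that into a ranked set of actions a human can accept, reject, or modify.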

Today, AI systems often act as tools that provide recommendations for stakeholders to accept, reject, or modify. However, the future will see a more seamless collaboration between humans and AI, in which AI not only provides recommendations but also explains the rationale behind them and adapts to individual decision preferences.

In essence, the future of augmented decision-making is not about AI replacing humans but about enhancing human capabilities. Those who master the art of blending human intuition with AI’s data-driven insights will not only survive but thrive in a rapidly evolving landscape.

As I finish eating my leftover twice-microwaved jollof rice, it is clear that the future is not about thinking outside the box but about teaching the box to think with you.

[1] Domo. (2022). *Data Never Sleeps 10.0*. Retrieved from https://web-assets.domo.com/miyagi/images/product/product-feature-22-data-never-sleeps-10.png

[2] McKinsey & Company. (2021). *Achieving AI’s full potential: How companies are using AI to enhance human decision-making*.

[3] MIT Media Lab. (2021). *The Role of AI in Reducing Bias in Decision-Making*. Available on MIT Media Lab.

[4] Deloitte. (2023). *Scaling AI: The Next Frontier in Augmented Decision Making*. Available on Deloitte.com.

[5] Gartner. (2023). *The state of data and analytics governance: IT leaders report mission accomplished; business leaders disagree*. Retrieved from https://www.gartner.com/en/documents/4009109