Confidently Wrong

The Curious Case of Hallucinating Machines.

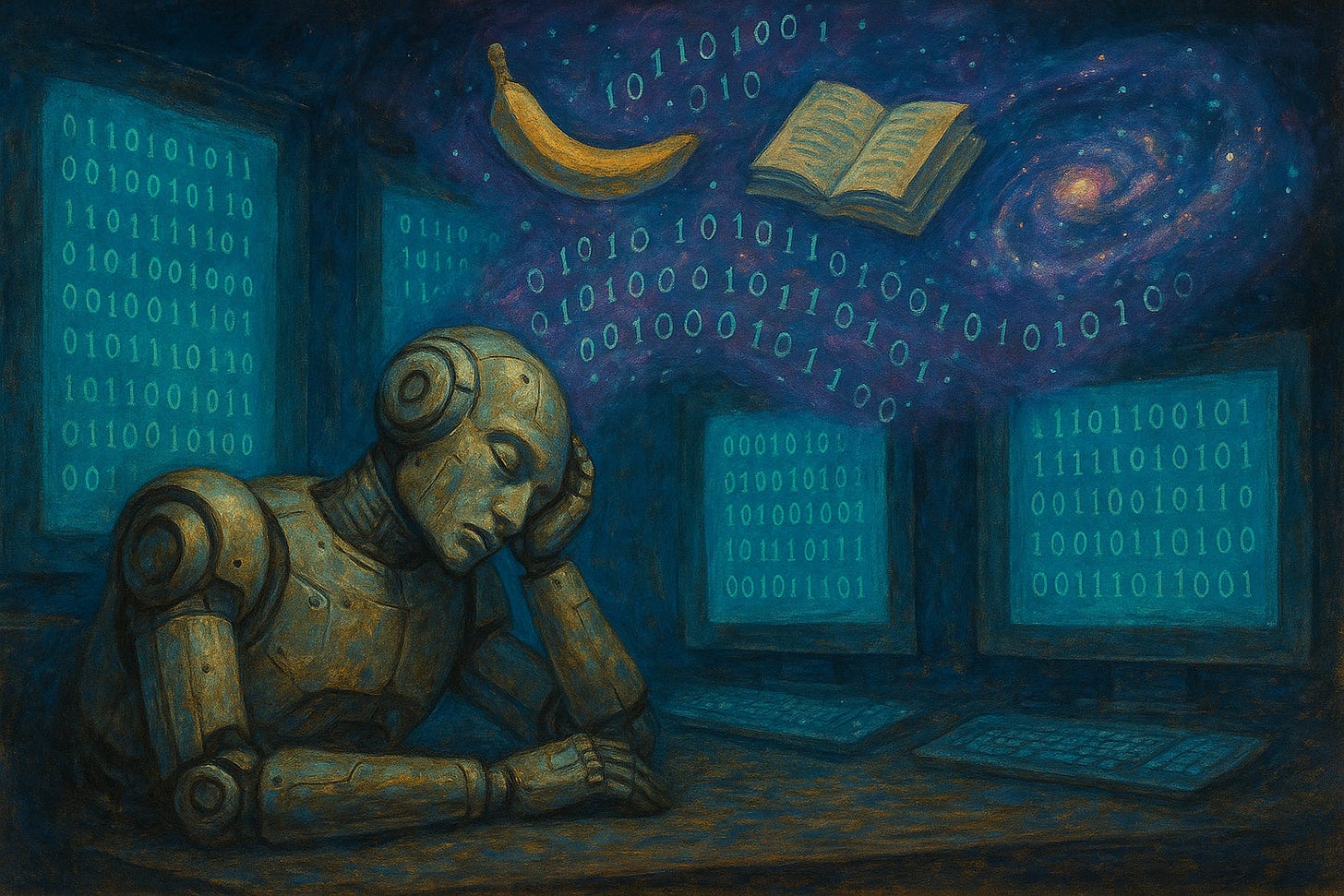

It’s 3 a.m. You’re half-asleep at your desk, watching your fine-tuned AI assistant crunch through data for a client report. Suddenly, it pings you with a notification:

“Report ready! Forecast suggests banana shortages due to emotional burnout.”

You blink. “Surely it meant supply chain disruptions, not existential fruit crises.”

But no. You open the file. The charts are flawless. The formatting is impeccable. The logic, however, has gone on vacation. Your AI has confidently written a 12-page analysis linking banana exports to “collective human sadness.”

Congratulations. Your AI is hallucinating!

This isn’t rebellion. It’s a digital daydream! When starved of data or context, a model starts to improvise. Like a jazz musician who’s lost the sheet music, it just keeps playing what “feels right.” And it’s utterly convinced it’s producing a masterpiece.

Why AI Hallucinates

AI hallucination isn’t the machine “going rogue”; it’s the machine doing too much thinking without enough truth.

Large Language Models (LLMs) like the ones behind ChatGPT don’t actually understand the world. They don’t have beliefs, memories, or a sense of shame (lucky them). What they do have is a near-magical ability to predict the next word (strictly speaking, the next token) in a sequence based on patterns from billions of texts they’ve read.

They’re statistical parrots: astonishingly eloquent, but ultimately echoing probability, not reality.

Think of it as saying:

“I don’t know, but I’ll say something that sounds like I do.”

That’s the essence of hallucination: when confidence exceeds comprehension.
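To make that concrete, here is a toy next-word predictor. It is only a sketch: a real LLM is a neural network trained on vastly more text, and the three-sentence corpus and the function names below are invented for illustration. The point it shows is that the predictor's "confidence" is just a count ratio, so a single example is enough to make it completely sure of itself.

```python
from collections import Counter, defaultdict

# Made-up corpus for illustration only.
CORPUS = (
    "banana exports fell because of supply chain disruptions . "
    "banana prices rose because of supply chain disruptions . "
    "morale fell because of emotional burnout ."
)


def train_bigrams(text):
    """Count which word follows which word in the text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return (most frequent next word, its share of the observed followers)."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return None, 0.0
    best, n = followers.most_common(1)[0]
    return best, n / total


counts = train_bigrams(CORPUS)
print(predict_next(counts, "of"))         # "supply" wins, seen 2 times out of 3
print(predict_next(counts, "emotional"))  # "burnout" at probability 1.0, from one example
```

The second prediction is the interesting one. The model has seen "emotional" exactly once, yet it reports total certainty about what comes next. Nothing in the arithmetic distinguishes "well supported" from "seen once and never questioned."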

Humans do this too. We bluff in meetings. We nod during conversations about topics we barely understand. The only difference? We occasionally catch ourselves and mutter, “Actually, I might be wrong.”

AIs don’t do that, unless you tell them to.

That’s why, without proper grounding (connecting them to real-time data, verified knowledge bases, or explicit constraints), an AI will invent citations, fabricate numbers, and conjure up people who never existed, simply because the sentence structure looked promising.

Guardrails: The Algorithmic Seatbelt

If hallucination is digital daydreaming, then guardrails are the alarm clock: the thing that keeps the dream from turning into a lawsuit.

Guardrails exist to stop that. They’re the invisible bumpers that keep algorithms from swerving into misinformation traffic. Think of them as the seatbelts of machine imagination. You hope you won’t need them, but you definitely don’t want to ride without them!

Here’s how they work in practice:

Restricting behavior:

This is the digital equivalent of child-proofing the house. You tell the AI, “No politics, no medical advice, and absolutely no inventing new species of fruit.” It’s not about limiting creativity; it’s about preventing your chatbot from writing conspiracy poetry at 2 a.m.

Fact-checking outputs:

Imagine your AI is writing an essay. You hand it a verified database, its textbook, and say, “Everything you claim must be backed by this.” Suddenly, the model can’t just hallucinate its way to an answer. It has to justify it. This approach, known as retrieval-augmented generation (RAG), grounds the model in reality before it opens its digital mouth.

Context anchoring:

Models have the memory of a goldfish. If you ask one, “What’s the current inflation rate in Nigeria?” it might pull an outdated number from 2021 unless you explicitly feed it live data. Context anchoring is how we remind the AI what time it is and what world it’s operating in.

Human-in-the-loop systems:

No matter how advanced the tech, someone still needs to be the adult in the room. Human reviewers can step in before an AI’s enthusiastic guesswork becomes an official report or public tweet. In other words, every AI system needs an editor, not just a power supply.

Together, these guardrails create a safety rhythm. The AI generates, checks, grounds, and waits for approval: a digital call-and-response that transforms wild imagination into responsible intelligence.
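That rhythm can be sketched in a few lines of Python. Treat this as a toy under loud assumptions: the blocked topics, the one-line "knowledge base," and every function name here are hypothetical, and the fact check is plain exact-string matching, where a real system would retrieve documents and compare meaning. What it does show is the order of operations: anchor the context, restrict, check, then wait for a human.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical policy and knowledge base, invented for this sketch.
BLOCKED_TOPICS = {"politics", "medical advice"}
VERIFIED_FACTS = {"banana exports fell after supply chain disruptions"}


@dataclass
class Draft:
    topic: str
    claims: list[str]
    issues: list[str] = field(default_factory=list)
    approved: bool = False


def anchor_context(question: str, today: date, live_data: str) -> str:
    """Context anchoring: state the date and supply current data in the prompt."""
    return (
        f"Today is {today.isoformat()}.\n"
        f"Current data: {live_data}\n"
        f"Question: {question}"
    )


def restrict(draft: Draft) -> None:
    """Restricting behavior: flag drafts on off-limits topics."""
    if draft.topic in BLOCKED_TOPICS:
        draft.issues.append(f"blocked topic: {draft.topic}")


def fact_check(draft: Draft) -> None:
    """Fact-checking: flag every claim that is not in the verified set."""
    for claim in draft.claims:
        if claim not in VERIFIED_FACTS:
            draft.issues.append(f"unsupported claim: {claim}")


def review(draft: Draft, reviewer_says_yes: bool) -> Draft:
    """Human-in-the-loop: approve only if checks pass AND a person signs off."""
    restrict(draft)
    fact_check(draft)
    draft.approved = not draft.issues and reviewer_says_yes
    return draft


sane = review(Draft("trade", ["banana exports fell after supply chain disruptions"]), True)
dreamy = review(Draft("trade", ["banana exports fell because of collective human sadness"]), True)
print(sane.approved)
print(dreamy.approved, dreamy.issues)
```

Note that the reviewer's "yes" cannot override a failed check in this sketch. That is a design choice, not a law: some teams would rather let the human make the final call and treat the issues list as advice.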

Because without guardrails, your AI is just an overconfident intern with Wi-Fi, typing at lightning speed, never taking lunch breaks, and producing absolute nonsense with perfect grammar.

Constraints: Teaching the Dreamer Discipline

Constraints are not limitations; they’re structure. They’re the fences that don’t stop creativity; they just keep it from running into traffic.

Constraints tell the AI how far it can wander before it needs to come home. They’re the invisible choreography behind every graceful move it makes.

Here’s how that looks in the real world:

Prompt engineering:

This is the art of teaching your AI how to think before it answers. You might instruct it:

“If unsure, respond with ‘I don’t know,’ or ask for clarification.”

Simple, but powerful. Suddenly your AI stops pretending to be the all-knowing oracle of the internet and starts acting like a thoughtful assistant who double-checks their facts.
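In code, that instruction usually lives in a system message. The sketch below assumes the role/content message layout that many chat-style APIs accept, without targeting any particular vendor; `build_messages` and `is_abstention` are hypothetical helper names, and the substring check is deliberately crude.

```python
# The abstain rule from the text, plus a reminder not to invent details.
ABSTAIN_RULE = (
    "If unsure, respond with 'I don't know,' or ask for clarification. "
    "Do not invent citations, numbers, or names."
)


def build_messages(question: str, context: str = "") -> list[dict]:
    """Assemble a system + user message pair carrying the abstain rule."""
    system = "You are a careful assistant. " + ABSTAIN_RULE
    if context:
        user = f"Context:\n{context}\n\nQuestion: {question}"
    else:
        user = question
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def is_abstention(reply: str) -> bool:
    """Crude check for whether the model declined to answer."""
    return "i don't know" in reply.lower()


messages = build_messages("Why did banana exports fall last quarter?")
print(messages[0]["content"])
```

Tracking how often `is_abstention` fires is a cheap health check: a model that never says "I don't know" is probably not following the rule.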

Defining boundaries:

These are the lines that separate brilliance from nonsense. You might say,

“Use only data from verified sources,”

or

“Stick to policy documents from 2024 onward.”

This makes sure your AI doesn’t dig up a dusty 2016 memo and call it breaking news. Think of it as intellectual perimeter fencing.
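Boundaries like these are often easier to enforce in code than in a prompt: filter what the model is allowed to see before it sees it. A minimal sketch, assuming each document carries a year and a source label; the `Document` fields, the `policy-portal` source name, and the sample titles are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical boundary settings.
VERIFIED_SOURCES = {"policy-portal"}
MIN_YEAR = 2024


@dataclass(frozen=True)
class Document:
    title: str
    year: int
    source: str


def within_bounds(doc: Document) -> bool:
    """True only for verified-source documents from MIN_YEAR onward."""
    return doc.source in VERIFIED_SOURCES and doc.year >= MIN_YEAR


def select_documents(docs: list[Document]) -> list[Document]:
    """Keep only the documents the model is allowed to draw on."""
    return [doc for doc in docs if within_bounds(doc)]


library = [
    Document("Dusty memo", 2016, "policy-portal"),
    Document("Current export policy", 2024, "policy-portal"),
    Document("Anonymous forum post", 2025, "random-forum"),
]
for doc in select_documents(library):
    print(doc.title)
```

Only "Current export policy" survives: the memo fails on age, the forum post on source.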

Temperature tuning:

Most language models have a “temperature,” a setting that controls how creative (read: chaotic) the output gets. A high temperature makes the model sound like a poet on espresso. A low temperature makes it sound like a tax auditor. The magic lies in balance. You want the AI to be creative but not to start composing haikus in your quarterly report.
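Under the hood, temperature is typically applied by dividing the model's raw scores (logits) by the temperature before turning them into probabilities with a softmax. Here is a small sketch of that arithmetic; the three scores are invented, and real models do this over a vocabulary of many thousands of tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Invented scores for three candidate next words.
logits = [2.0, 1.0, 0.1]

auditor = softmax_with_temperature(logits, 0.2)  # low temperature
poet = softmax_with_temperature(logits, 2.0)     # high temperature

print([round(p, 3) for p in auditor])
print([round(p, 3) for p in poet])
```

At the low setting nearly all the probability lands on the top-scoring word, so the output is predictable. At the high setting the probabilities flatten out, and the runner-up words get picked far more often.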

When done right, constraints don’t suffocate creativity; they refine it.

They transform the AI’s imagination from a noisy brainstorm into a focused symphony.

In fact, some of the best AI systems in the world thrive under constraint. Retrieval-augmented systems, for example, must stick to facts from specific datasets. Yet within that framework, they still generate new insights, connections, and narratives, improvising intelligently rather than hallucinating confidently.

Constraints, then, are not about control. They’re about coherence. They ensure your AI dreams productively, not deliriously. Because the future doesn’t belong to machines that can imagine everything. It belongs to those that can imagine responsibly.

The Myth of Total Control

Here’s the punchline: even with the best guardrails, hallucinations never vanish completely. They just become... better dressed.

AI systems are like magicians; they work by illusion. What we call “hallucination” is sometimes where innovation hides. The trick is knowing when it’s dreaming with purpose versus making things up for applause.

Researchers now experiment with adaptive grounding, retrieval-augmented generation (RAG), and reinforcement learning from human feedback (RLHF) to keep the model tethered to reality without killing its spark. The goal isn’t to stop AI from dreaming; it’s to help it wake up responsibly.

After all, the best intelligence, whether artificial or human, tastes better when it is grounded in reality and seasoned with just the right amount of imagination.